How You Can Use GitOps to Vastly Improve Your Developer Experience

In platform engineering, doing automation the right way is the key to success. In this post, we break down the steps to do automation right with GitOps. We deep-dive into how we improved developer experience at a large, heavily regulated financial services company by using GitOps.

The GitOps framework allows teams to deliver value by increasing visibility into their infrastructure, improving key DevOps Research and Assessment (DORA) metrics and providing a repeatable pattern to automate tasks. Before we explain how it does this, we should explain how it works.

The Aim of GitOps

The aim of GitOps is to store the state of your infrastructure in Git. Every change to your infrastructure is made through the Git repository, which means every change is clear and has an audit trail: you can see who made the change, what it was and when it was made. Having Git at the centre of your automation also reduces the amount of process required to secure changes. Automating in a GitOps way means infrastructure changes are made through automated Git commits. GitOps is usually combined with a tool that deploys whatever is in the Git repository, so all the automation needs to do is write to the repository.
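As a rough illustration of automation that only writes to the repository, here is a minimal Python sketch. The repository layout (`namespaces/<name>.yaml`), the `team` label and the function names are assumptions made for the example, not part of any particular tool.

```python
import subprocess
from pathlib import Path


def render_namespace(name: str, team: str) -> str:
    """Render a minimal Kubernetes Namespace manifest as YAML text."""
    return (
        "apiVersion: v1\n"
        "kind: Namespace\n"
        "metadata:\n"
        f"  name: {name}\n"
        "  labels:\n"
        f"    team: {team}\n"  # hypothetical label, used here for illustration
    )


def write_manifest(repo: Path, name: str, team: str) -> Path:
    """Write the manifest into the working tree and return its path.

    Assumed layout: one file per namespace under namespaces/.
    """
    target = repo / "namespaces" / f"{name}.yaml"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(render_namespace(name, team))
    return target


def commit_change(repo: Path, path: Path, message: str) -> None:
    """Record the change in Git; deployment is left to the CD tool."""
    relative = str(path.relative_to(repo))
    subprocess.run(["git", "add", relative], cwd=repo, check=True)
    subprocess.run(["git", "commit", "-m", message], cwd=repo, check=True)
    subprocess.run(["git", "push"], cwd=repo, check=True)
```

The automation never talks to the cluster. The commit is the only side effect, which is what gives you the audit trail of who changed what and when.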

Keeping infrastructure configuration in Git and making changes through Git means that new starters or colleagues on other teams who aren’t up to speed can quickly become acquainted with the infrastructure by looking at your repository. It will also help staff engineers get a picture of the high-level system more easily.

Improving DORA Metrics with GitOps

Another way in which GitOps helps is by improving DORA metrics. According to the DevOps Research and Assessment (DORA) report [1], published annually, four metrics outline the quality and performance of a team that develops software systems. They are:

- Deployment Frequency—How often an organisation successfully releases to production

- Lead Time for Changes—The amount of time it takes a commit to get into production

- Change Failure Rate—The percentage of deployments causing a failure in production

- Time to Restore Service—How long it takes an organisation to recover from a failure in production

These are the most essential measurable metrics contributing to a good developer experience. GitOps improves all of these metrics. If you administer your Kubernetes cluster through GitOps, and your whole cluster goes down, then it is just a case of pointing a rebuilt or new cluster to that Git repository, and all of your resources get spun up from that Git repository. This improves the Time to Restore Service metric.

If your cluster administration doesn’t use GitOps and instead involves calling a CI/CD pipeline that creates a namespace directly on the cluster or installs a helm chart, then to restore it, you need to call all of the pipelines again, possibly in the correct order. When your systems are enterprise-scale, with hundreds of namespaces, role bindings and policies, the time to restore massively increases.
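To make the four metrics concrete, the sketch below computes them from a list of deployment records. The `Deployment` record and its fields are assumptions for the example, and time to restore is measured from the failed deployment as a simple proxy for when the failure began.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service was restored


def deployment_frequency(deploys: list, days: int) -> float:
    """Deployments per day over the observation window."""
    return len(deploys) / days


def lead_time_for_changes(deploys: list) -> timedelta:
    """Mean time from commit to production (needs at least one deployment)."""
    total = sum((d.deployed_at - d.committed_at for d in deploys), timedelta())
    return total / len(deploys)


def change_failure_rate(deploys: list) -> float:
    """Fraction of deployments that caused a failure in production."""
    return sum(d.failed for d in deploys) / len(deploys)


def time_to_restore(deploys: list) -> Optional[timedelta]:
    """Mean time from a failed deployment to restoration, or None if no failures."""
    failures = [d for d in deploys if d.failed and d.restored_at is not None]
    if not failures:
        return None
    total = sum((d.restored_at - d.deployed_at for d in failures), timedelta())
    return total / len(failures)
```

Tracking these numbers before and after adopting GitOps is one way to check whether the change is actually paying off.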

Key Principles for GitOps Success

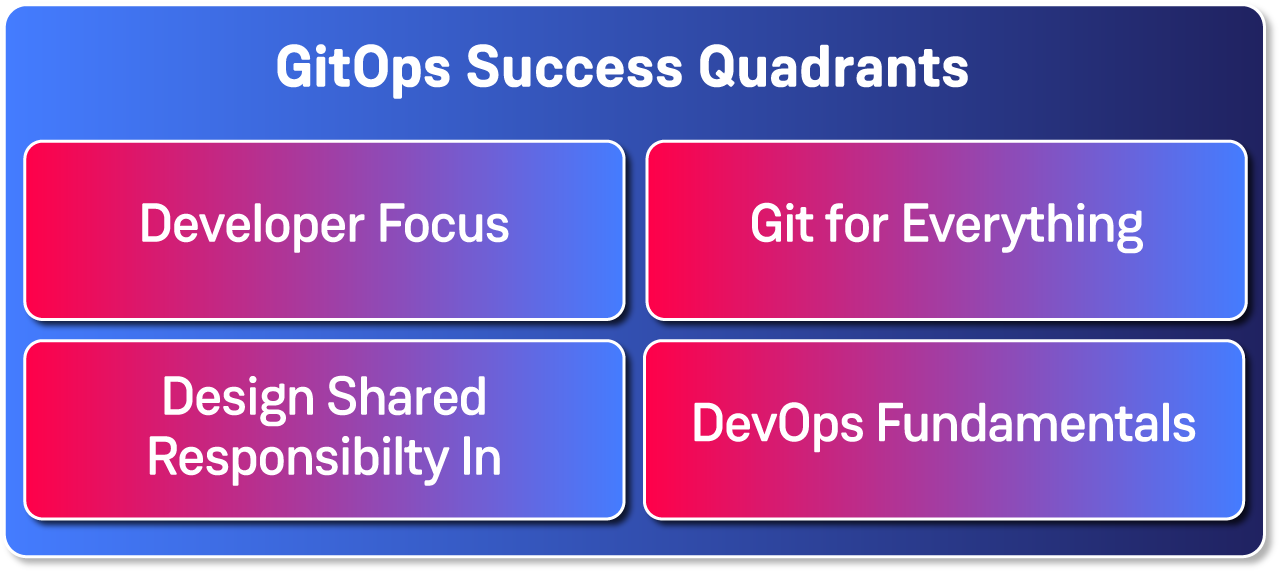

While most conversations around GitOps revolve around specific tooling, there is no single required tool besides Git. Contino prefers to choose the best tool for the job rather than aligning with a specific vendor, so we prefer to talk about the key principles to success with GitOps rather than the best tool. Here are our principles for GitOps success:

Image: A Quadrant of Principles to follow for GitOps success

1. Developer Focus: Focus on the end goal of the automation and be pragmatic about what you need to do. Map out and optimise for developer flow.

2. Use Git for everything: All changes should go through Git. Even if automated, if your changes aren’t through Git, then you have no auditability, version control or easy way to figure out what your system looks like.

3. Design shared responsibility in: Map out what development and platform teams are responsible for. Modelling this will give everyone clarity and help increase collaboration.

4. Focus on DevOps fundamentals: You need a clear branching strategy (such as trunk-based development). Also, take ownership of and collaborate with those consuming your infrastructure.

Improving Developer Experience

Platform engineering aims to improve the developer experience by smoothing over the gaps between Dev and Ops. It does this by providing an automated workflow that simplifies how developers consume infrastructure and reduces their cognitive load. Breaking down the barriers to DevOps can be difficult in large enterprises, but it is crucial if they are to improve their software systems.

The key to DevOps is collaboration between Developers and Operations. Even when developing a platform, it is essential to collaborate and not introduce the same silos that existed in the era before DevOps. GitOps helps reduce silos by increasing the visibility of infrastructure and the automation that creates it. This visibility doesn’t automatically provide collaboration, which we need for a good developer experience, but it enables it by building a system that is visible to developers and operators.

Case Study: GitOps in Practice

Our client, a financial services institution that naturally had to work through a lot of regulation, was running a wider project to build a developer platform. Visibility was one of the most difficult problems we encountered: we had a large number of clusters without much insight into their current (or desired) state. Because of this, we decided to implement ArgoCD to facilitate Continuous Deployment and to develop GitOps automation for cluster administration, with the idea that GitOps would help with the visibility issue.

The clusters were multi-tenant, with multiple development teams using each one, so basic cluster administration involved creating namespaces, network policies and role bindings. We started with this administration process because it was well defined, which let us deliver value quickly. It was vital in creating automated environments for developers, and it allowed us to see those environments in Git and change them later if (or when) requirements changed.

The initial development gave us buy-in to the ideas and feedback on the implementation, and let us upskill others in the team so they could contribute to the system. Socialising and helping build these ideas means teams can develop the skills to use GitOps throughout their work and create an automated, robust system. At Contino, we start with a small piece of work and upskill the rest of the team as we build. This dual delivery and upskilling model is part of our momentum framework.

At our client, we eventually needed to integrate our automation with the broader system. What happens when you inevitably need to integrate a GitOps automated system with a wider system? There are quite a few considerations to make. On the one hand, you have a fast-moving system containing the state of the infrastructure; on the other hand, a relatively slow-moving system comprising the GitOps scripts. From a technical point of view, the pipeline acts as the interface, which should stay mostly the same. If I call a pipeline that uses GitOps to create an Azure subscription, the interface (the pipeline trigger and variables) should rarely change. That interface should be versioned like an API, with semantic versioning indicating whether a change is major, minor or a patch. The Git repository that holds the record of all the subscriptions created by this automation should be kept on the mainline/trunk branch.
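One way to reason about versioning a pipeline interface like an API is to classify each change by whether existing callers would break. The sketch below assumes the interface is described as a mapping from variable name to whether the variable is required. That representation and the function names are ours, chosen for illustration.

```python
def classify_change(old: dict, new: dict) -> str:
    """Classify an interface change as 'major', 'minor' or 'patch'.

    Both arguments map a pipeline variable name to True if it is required.
    """
    common = old.keys() & new.keys()
    removed = old.keys() - new.keys()
    added = new.keys() - old.keys()
    newly_required = {k for k in common if new[k] and not old[k]}
    relaxed = {k for k in common if old[k] and not new[k]}

    # Existing callers break if a variable disappears or a new input is demanded.
    if removed or newly_required or any(new[k] for k in added):
        return "major"
    # New optional inputs or relaxed requirements are backwards compatible.
    if added or relaxed:
        return "minor"
    return "patch"


def bump(version: str, change: str) -> str:
    """Return the next semantic version for the given kind of change."""
    major, minor, patch = (int(part) for part in version.split("."))
    if change == "major":
        return f"{major + 1}.0.0"
    if change == "minor":
        return f"{major}.{minor + 1}.0"
    return f"{major}.{minor}.{patch + 1}"
```

For example, adding an optional `cost_centre` variable to a subscription pipeline would be a minor bump, while adding a required `owner` variable would be a major one, because existing callers would start failing.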

Continuous Deployment Tooling

While GitOps isn’t about tooling, it is useful to mention the projects that enable it and work well with it. GitOps was born from Kubernetes as a way of making automated changes while continuing to track them through a version control system. Continuous Deployment systems (such as ArgoCD and Flux) enable GitOps by automatically deploying whatever is in the Git repository. In technical terms, they continuously bring the system's actual state towards the desired state stored in the Git repository.

ArgoCD and Flux are designed to deploy Kubernetes manifests. Although that is where GitOps is most popular, GitOps is not limited to Kubernetes. Crossplane applies the same reconciliation model to cloud infrastructure and can provision resources on Azure, AWS and GCP. With Crossplane, you can use the same techniques to deploy your cloud-native infrastructure as you use to deploy your cloud-native applications. It is also possible to use Terraform for GitOps, with the Flux Terraform Controller. We have some great writing detailing the tools available and how to use them on internal developer platforms [2].
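The idea of bringing the live state towards the desired state can be sketched in a few lines. This is a toy model under simplifying assumptions (resources are plain dictionaries keyed by name, and applying a change always succeeds). It is not how ArgoCD or Flux are implemented.

```python
def diff(desired: dict, live: dict) -> dict:
    """Compare desired state (from Git) with live state (from the cluster)."""
    common = desired.keys() & live.keys()
    return {
        "create": sorted(desired.keys() - live.keys()),
        "update": sorted(k for k in common if desired[k] != live[k]),
        "delete": sorted(live.keys() - desired.keys()),
    }


def reconcile(desired: dict, live: dict) -> dict:
    """Run one reconciliation pass and return the resulting live state."""
    actions = diff(desired, live)
    result = dict(live)
    for key in actions["create"] + actions["update"]:
        result[key] = desired[key]  # apply what Git says
    for key in actions["delete"]:
        del result[key]             # remove what Git no longer declares
    return result
```

A real tool runs this comparison continuously, so manual changes made directly on the cluster show up as drift and are either reported or reverted.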

Complexity and Pain Points

Configuring Continuous Deployment tools can be surprisingly complex. This is because you are taking a point-in-time manifest (such as a YAML file) and relying on the tool to work out what to do to make your system match that state. One pain point we hit was bootstrapping clusters. We had a process that created a Kubernetes cluster from scratch, and our Git repository contained everything required to set that cluster up, including installs for Istio, Cert-Manager and other projects we needed. Due to security requirements, all traffic needed to go through Istio, which included the traffic for ArgoCD (the CD tool) itself. The problem was that if you installed ArgoCD and then Istio, ArgoCD would not have an Istio sidecar injected until it was restarted, because the sidecar is only added when a pod is created. We instead had to install ArgoCD, then install Istio and finally restart ArgoCD so that it ran with the sidecar.
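A small check can make this kind of ordering problem visible. The sketch below takes pod objects shaped like the Kubernetes API's JSON and reports which ones are running without the `istio-proxy` container that Istio injects. It assumes sidecar mode, and the helper names are ours.

```python
SIDECAR_NAME = "istio-proxy"  # container name Istio uses for its injected proxy


def has_sidecar(pod: dict) -> bool:
    """Return True if the pod spec includes the Istio proxy container.

    Init containers are checked too, since some Istio configurations
    run the proxy as an init container.
    """
    spec = pod.get("spec", {})
    containers = spec.get("containers", []) + spec.get("initContainers", [])
    return any(c.get("name") == SIDECAR_NAME for c in containers)


def pods_needing_restart(pods: list) -> list:
    """Names of pods that were created before injection was available."""
    return [p["metadata"]["name"] for p in pods if not has_sidecar(p)]
```

The input could come from the `items` list of `kubectl get pods -o json` for the namespace where ArgoCD runs. Any pod this reports would need restarting before its traffic goes through the mesh.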

Testing: What and Where to Test

We mentioned that a high deployment frequency is one of the four main DORA metrics. GitOps alone is not enough to sustain it: maintaining a high deployment frequency requires robust automated testing and validation mechanisms. Without these, it is impossible to be confident that any changes you make to the system work. The tests should also run as early as possible, following principles such as shift left.

Testing should start before the code is even committed. When we used Kustomize for our automation, we would do a Kustomize build as a pre-commit hook whenever any automation scripts changed. This build meant a change that broke the Kustomize build could never be committed. It means that the developer working on the automation gets instant feedback when making a commit rather than getting feedback later when their PR is created.
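A pre-commit hook along these lines could look like the following sketch. The `automation/` prefix and the overlay path are hypothetical, and the hook relies only on `git diff --cached --name-only` and `kustomize build`.

```python
import subprocess
import sys


def staged_files() -> list[str]:
    """List the files staged for the commit being created."""
    result = subprocess.run(
        ["git", "diff", "--cached", "--name-only"],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.splitlines()


def needs_build(files: list[str], watched_prefix: str = "automation/") -> bool:
    """Only rebuild when something under the automation directory changed."""
    return any(name.startswith(watched_prefix) for name in files)


def build(overlay: str) -> bool:
    """Run `kustomize build` for one overlay and report whether it succeeded."""
    result = subprocess.run(
        ["kustomize", "build", overlay], capture_output=True, text=True
    )
    if result.returncode != 0:
        print(result.stderr, file=sys.stderr)
    return result.returncode == 0


def main(overlays=("automation/overlays/dev",)) -> int:
    """Return 0 to allow the commit, 1 to block it."""
    if not needs_build(staged_files()):
        return 0
    return 0 if all(build(overlay) for overlay in overlays) else 1
```

Saved as an executable `.git/hooks/pre-commit` script that ends with `sys.exit(main())`, a non-zero exit code stops the commit, so a broken build never reaches the repository.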

There should be at least two key tests:

- A test to validate that the output files (with a set of defined input parameters) match the expected output.

- A test to deploy the changes to a cluster to check that it has been deployed correctly. This is important when YAML templates are changed to make sure that they don’t only pass linting but also practically work.
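The first of these tests is often called a golden-file test. A minimal sketch, assuming a hypothetical namespace template rendered with Python's `string.Template` (real automation would more likely render Kustomize or Helm output), might look like this:

```python
import difflib
from string import Template

# Hypothetical template standing in for the automation's real output.
NAMESPACE_TEMPLATE = Template(
    "apiVersion: v1\n"
    "kind: Namespace\n"
    "metadata:\n"
    "  name: $team-$env\n"
    "  labels:\n"
    "    team: $team\n"
)


def render(params: dict) -> str:
    """Render the manifest for a fixed set of input parameters."""
    return NAMESPACE_TEMPLATE.substitute(params)


def golden_diff(params: dict, expected: str) -> list:
    """Return a unified diff between expected and actual output.

    An empty list means the rendered output matches the golden file.
    """
    actual = render(params)
    return list(difflib.unified_diff(
        expected.splitlines(), actual.splitlines(),
        "expected", "actual", lineterm="",
    ))
```

Returning the diff rather than a boolean means a failing test shows exactly which lines of the template changed. The second test, deploying to a real cluster, cannot be replaced by this kind of check, because output that matches a golden file can still fail to apply.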

Validation of Kubernetes manifests can also be shifted left. A KubeCon 2023 talk highlighted how this was done at The New York Times using Kubeconform, improving developer productivity by finding errors earlier [3].

Tradeoffs

Between Reliability and Security

Like all engineering methodologies, GitOps makes some tradeoffs between reliability and security, and there are some implicit assumptions when it is used. First of all, the pipeline or compute that is writing to the repository has a very high level of privilege, being able to write to the mainline branch. This means that you must:

- Use safeguards (such as branch protection or deployments in GitHub) to prevent the pipeline (or compute) from being used without authorisation.

- Generate and store the secrets appropriately so only the pipeline (or compute) has access to them.

- Reduce the blast radius by separating different automation into different repositories or using different pipelines and path-scoped roles in the case of a mono repo.

The main tradeoff between security and reliability here is the choice between access controls and flexibility. Path-scoped roles may also be required even without a mono repo, so that the role deploying to production is different from the role deploying to a development or integration environment. Not every platform supports this directly, but a similar result can be achieved by combining a CODEOWNERS file with branch protection for specific paths.
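The logic behind path-scoped roles can be sketched as a check that a pipeline role only touches the paths it is scoped to. The directory names and role names below are hypothetical, and in practice this would normally be enforced by the Git platform rather than by custom code.

```python
# Hypothetical mapping from repository path prefix to the role allowed to change it.
PATH_ROLES = {
    "environments/dev": "deployer-dev",
    "environments/prod": "deployer-prod",
}


def required_roles(changed_files: list) -> set:
    """Work out which roles a set of changed files requires."""
    roles = set()
    for name in changed_files:
        matched = [
            role for prefix, role in PATH_ROLES.items()
            if name == prefix or name.startswith(prefix + "/")
        ]
        # Fail closed: a path nobody is scoped to cannot be written by automation.
        roles.update(matched or ["unscoped"])
    return roles


def is_authorised(role: str, changed_files: list) -> bool:
    """A role may write the change only if every touched path is in its scope."""
    return required_roles(changed_files) <= {role}
```

With this shape, a compromised development pipeline cannot write to production paths, which is the blast radius reduction described above.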

With CD Tooling

Due to the dependency between Continuous Deployment (CD) tools and GitOps, it’s also worth mentioning design tradeoffs with putting your infrastructure state in code and using CD tools. CD tools, such as ArgoCD and Flux, can increase the initial complexity due to the learning curve and difference in mindset.

The team must consider version control, branching strategy and tooling configuration. This initial investment pays off through greatly simplified operations. The reality is that the complexity must exist somewhere, and it is better to handle it up-front than to hand it down to operations teams in the future, which is what historically happened.

Such a tradeoff between initial and sustained velocity is important to highlight. The initial investment means that once implemented, it is possible to move very fast and maintain a high deployment frequency as long as there is adequate automated testing and validation.

Deletion

One situation that often gets overlooked is handling the deletion of resources. With FinOps becoming more prevalent, increasing attention is being paid to cutting cloud costs. Automatically deleting resources is as important as creating them, and it is a good litmus test of how well a system can handle change. CD tools can usually be configured either to allow automatic deletion or to allow only manual deletion. With manual deletion, all the user needs to do is check what is being deleted and press a sync button to delete the resources. How you handle deletion depends on your risk appetite, and although automatic deletion can be safe with the right guardrails, it takes time to develop and should be a product choice.
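The difference between the two deletion modes, plus one possible guardrail, can be sketched as follows. The `max_automatic` limit is our own illustrative guardrail rather than a setting from any particular CD tool.

```python
class TooManyDeletions(Exception):
    """Raised when automatic deletion would remove more than the guardrail allows."""


def plan_deletions(desired, live, automatic, approved=(), max_automatic=5):
    """Decide which live resources to delete.

    desired / live: collections of resource names (from Git and the cluster).
    automatic: True to delete without review, False to delete only approved names.
    approved: names a person has reviewed and confirmed (manual mode only).
    max_automatic: hypothetical guardrail on the size of an automatic deletion.
    """
    candidates = sorted(set(live) - set(desired))
    if not automatic:
        confirmed = set(approved)
        return [name for name in candidates if name in confirmed]
    if len(candidates) > max_automatic:
        raise TooManyDeletions(
            f"{len(candidates)} resources would be deleted; limit is {max_automatic}"
        )
    return candidates
```

A limit like this protects against the failure mode where an empty or mis-rendered repository makes every resource look like it should be deleted.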

Shared Responsibility Model

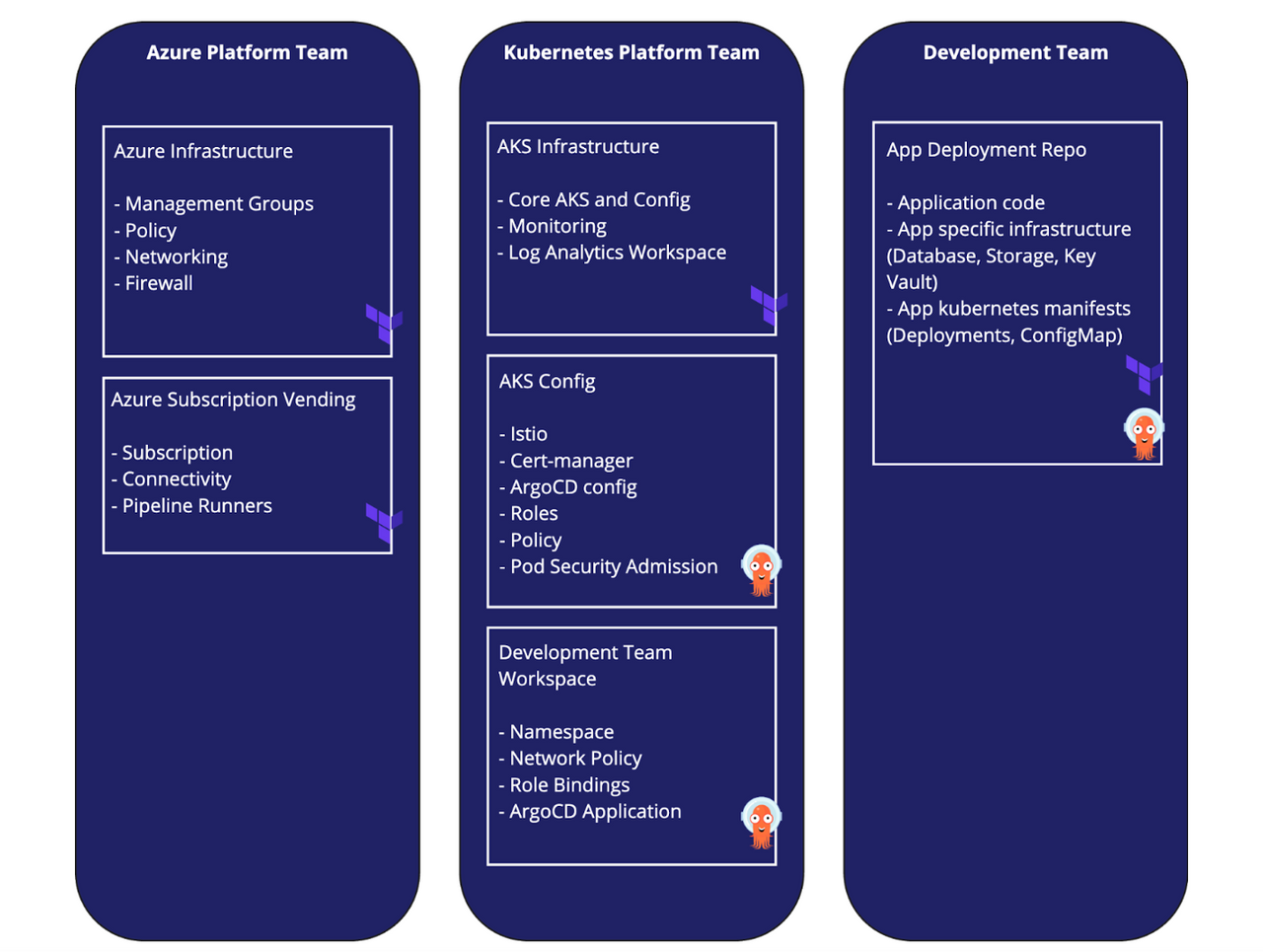

Image: GitOps teams with shared responsibility

DevOps was originally formed with the idea that Developers and Operations people should work together. The original DevOps talk given by John Allspaw and Paul Hammond really emphasised that the main idea is to come together across disciplines to build products.

We would like to set out the ideal model and responsibilities for teams using GitOps across their landscape. We believe that GitOps works best across multiple teams, with a number of GitOps experts being spread across teams.

Our diagram shows three core teams: a cloud platform team (the Azure platform team in our case), a Kubernetes platform team and a development team. GitOps responsibility is shared across the three teams. ArgoCD is managed by the platform team, but all three teams can do GitOps in some capacity.

Here is a summary of how each team uses GitOps:

- Azure Platform Team: Subscription Vending (commit adds Terraform Config)

- Kubernetes Platform Team: Development Team Workspaces (commit adds Namespace, Role Bindings, ArgoCD Application)

- Development Team: Application deployment (on release, updates deployment reference and adds expected container signature)

Responsibility for GitOps is shared: while teams may own certain parts of it (for example, subscription vending), multiple teams will interact with a tool such as ArgoCD.

Policy and the Development Cycle

Git should be used as the source of truth for both platform and development teams. It is important for the platform team to create policy as part of their feature work. For example, if you are creating a template for how teams should egress out of your cluster using Istio, part of that work should involve creating policy so that incorrectly configured objects won’t be admitted. In simpler terms, include Security Practices and Policy as part of the development cycle – Policy should be done as part of a piece of work rather than leaving other teams to guess what it should look like. It is also important to make sure that policy doesn’t override your configuration in Git.

Modern infrastructure development with GitOps allows teams to build systems that are higher quality and easier to operate. This methodology allows them to improve all DORA metrics, resulting in a higher-performing platform team. We used GitOps for a large, highly regulated financial services client by starting with a small piece of automation and growing it, training others in the team along the way. GitOps improved our systems and quality but required a lot of underlying knowledge about DevOps principles and Git. Using GitOps means accepting some tradeoffs that reduce initial velocity but increase velocity in the long run.

We hope that your GitOps journey is as fun and fruitful as ours has been!

[1] DevOps Research and Assessment program website

[2] Contino Engineering Blog: Creating your Internal Developer Platform

[3] Automating Configuration and Permissions Testing for GitOps with OPA… - Eve Ben Ezra & Michael Hume