7 Ways to Move Beyond Your Lift-and-Shift Migration and Make the Most of the Cloud

Cost savings are one of the main drivers behind public cloud adoption.

CTOs hear the gospel of cloud and its promises of cost-effective agility and scalability and understandably try to get there the quickest way possible: the lift-and-shift migration.

A lift-and-shift migration is when you take your existing data center workloads and move them to the cloud without any changes.

However, a lift-and-shift migration alone will not deliver the targeted reduction in total cost of ownership (TCO) or the expected return on investment (ROI). To really get the benefits of public cloud, it is important to go further!

Depending on the architecture of your applications, there are a number of actions you can take to further optimise for cost and agility.

In this blog, I shall discuss some of the common pain points that occur after a lift-and-shift migration and seven actions that you should take to increase the value of your migration and achieve the desired cost reductions.

3 Ways Lift-and-Shift Lets You Down

How you lift and shift to the public cloud can vary, both in terms of scale (from moving one application to migrating a complete data centre) and in complexity, especially around network design.

Having gone through the effort of migration, organisations that used a lift-and-shift model in isolation often find that it has not brought about all the benefits they were hoping for.

This raises a number of pain points, and I explore three common ones below:

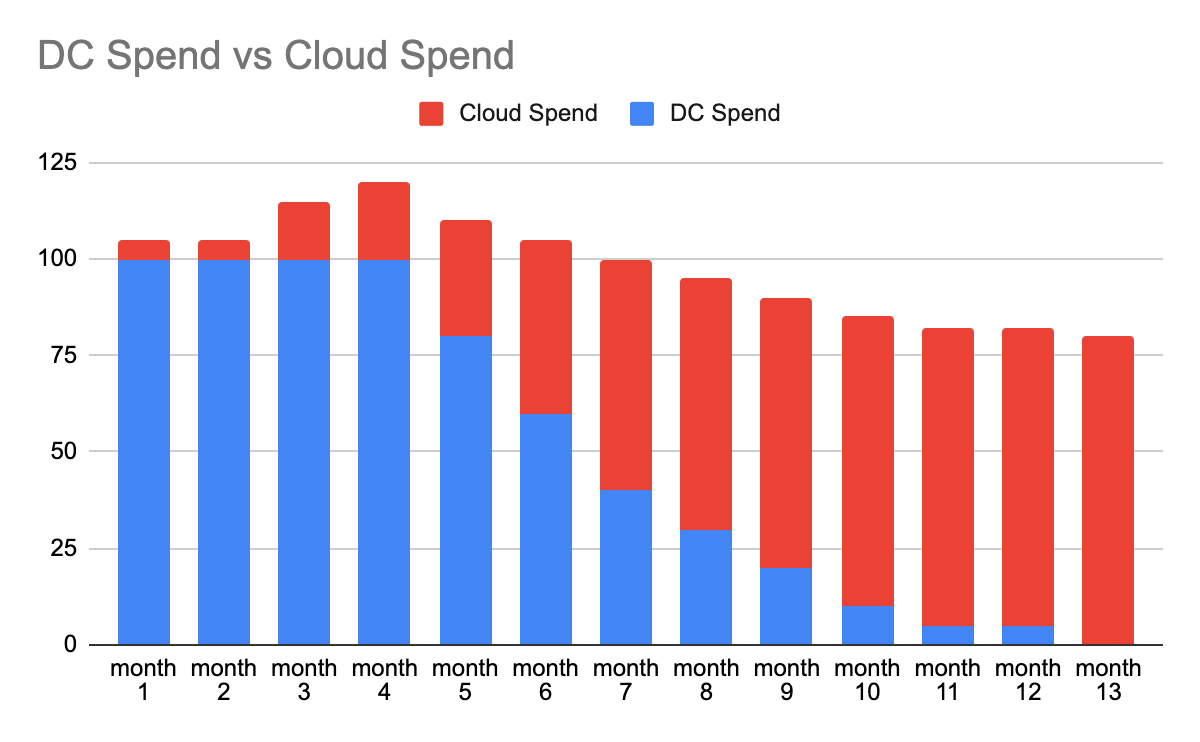

1. You aren’t achieving the cost savings you thought you would

Most lift-and-shift migrations have a business case built around achieving operating cost savings measured across hardware, software, energy and people.

What often happens with rapid lift-and-shift migrations is that the reality of having to manage systems on-premises and in the cloud during the transition phase actually creates additional costs.

The transition stage brings a peak in costs before achieving savings.

As costs rise, the emphasis shifts to completing the migration as rapidly as possible, with minimal budget, to minimise the duplicated cost of running on-premises IT infrastructure and the cloud in parallel.

But moving as fast as possible typically results in a poorly optimised migration that incurs greater costs over the long run.

For example, rapid migration often means sizing VMs in the cloud as they were on-premises. But on-premises servers are sized for peak load, so a similar setup in the cloud will be underutilized, incurring unnecessary cost.

These items add up to a much greater spend than anticipated.

2. You just have a mess for less

I have seen too many migrations that have replicated the physical data centre in the cloud without consideration for the features, constraints and opportunities that public cloud provides. The majority of enterprise systems have evolved over time, through mergers and acquisitions, are not optimised for dynamic demand and are often very complex.

When you replicate data centre designs in the cloud, you end up with the same mess you had on-premises, just a bit cheaper. And the cloud can offer so much more than that!

A recurring example is with network design. Network teams often replicate the on-premises physical network (where you can put a firewall physically between two networks) and adopt a hard-shell, soft-centre design.

In the cloud, the network is software-defined, which enables dynamic, programmatic configuration and deployment through infrastructure pipelines.

The hard-shell, soft-centre design does not work in public cloud due to its multi-tenancy model where services are provided from shared backend infrastructure.

Security models based on fixed IP addresses become more complex due to the dynamic nature of the cloud’s capabilities such as auto-scaling.

Traditional network designs also do not take advantage of features such as network security groups, gateway services and peering between networks.

This also changes the threat landscape, with a number of cloud services being available on the public internet with public IP addresses and internet access.

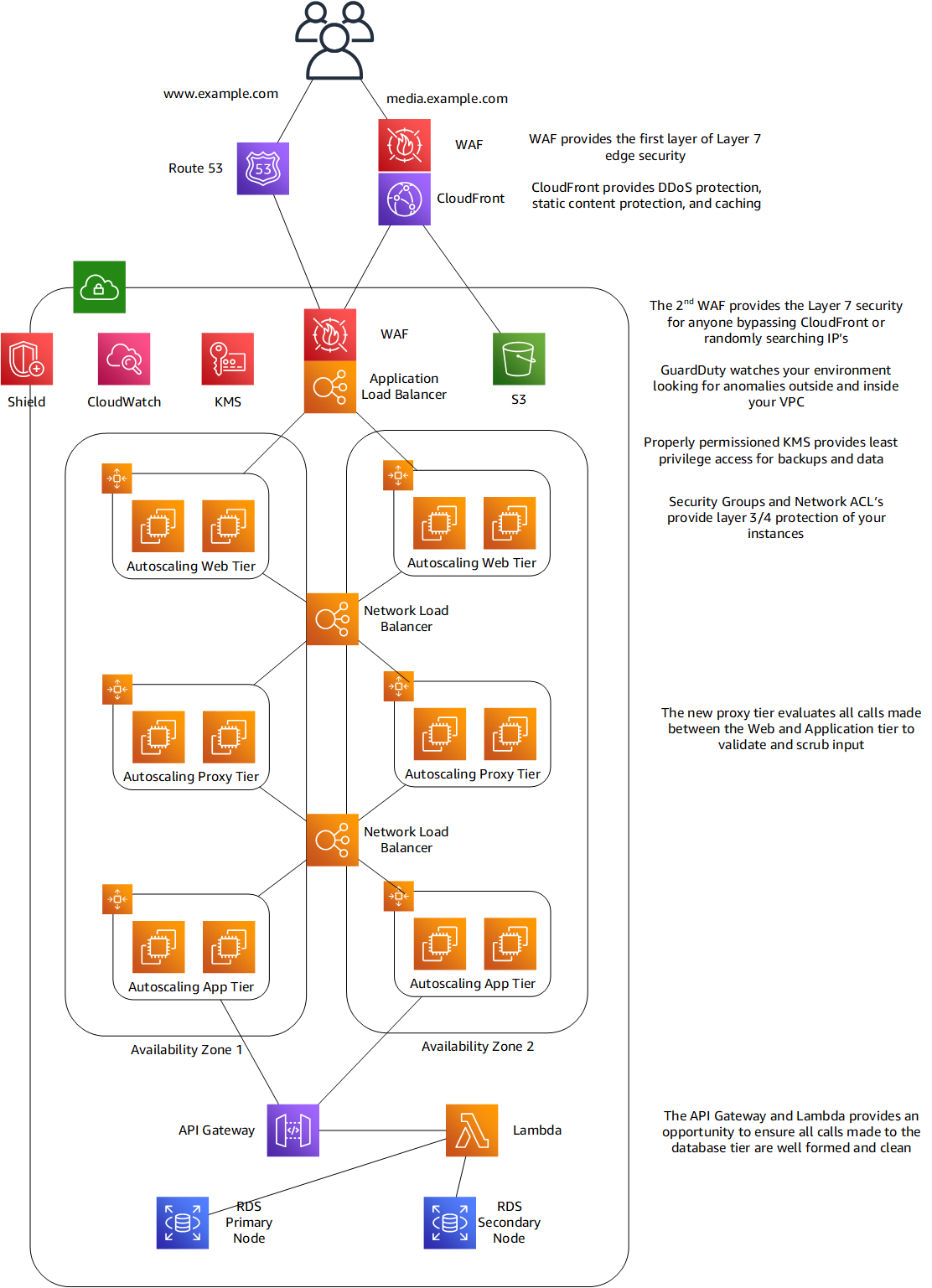

The best practice is to assume breach (that your network has been infiltrated) and design a zero-trust network in the cloud.

Below is a diagram of an example AWS Zero Trust Network Design. According to AWS, zero-trust networking is “a model where application components or microservices are considered discrete from each other and no component or microservice trusts any other. This manifests as a security posture designed to consider input from any source as potentially malicious.”

How to think about Zero Trust architectures on AWS

It should also be noted that in your physical data centre you assign fixed IP addresses and use them as control points. Due to the ephemeral nature of the cloud, however, IP addresses are dynamic and, unless configured otherwise, services are addressed using fully qualified domain names. This makes controls based on IP address alone ineffective, so more defence in depth is needed, especially when using cloud-native services.
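To make the IP-address problem concrete, here is a minimal Python sketch comparing an IP allow-list with a rule keyed on the workload's identity. The `Instance` model, the addresses and the "web-tier" role are all hypothetical; in AWS, a comparable idea is a security group rule that references another security group rather than a CIDR range.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """Hypothetical model of a VM: its current IP and the identity/role it runs as."""
    ip: str
    role: str


def ip_rule_allows(source: Instance, allowed_ips: set) -> bool:
    # Control point tied to addresses captured when the rule was written.
    return source.ip in allowed_ips


def identity_rule_allows(source: Instance, allowed_roles: set) -> bool:
    # Control point tied to what the workload is, not where it currently sits.
    return source.role in allowed_roles


if __name__ == "__main__":
    allowed_ips = {"10.0.1.15"}  # web server IP recorded at deployment time
    allowed_roles = {"web-tier"}

    # Auto-scaling replaces the web server and the new instance gets a new IP.
    replacement = Instance(ip="10.0.1.42", role="web-tier")
    print(ip_rule_allows(replacement, allowed_ips))          # False: legitimate traffic blocked
    print(identity_rule_allows(replacement, allowed_roles))  # True
```

Identity on its own is not zero trust either: in a zero-trust design each request would still be authenticated and authorised, which this sketch deliberately leaves out.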

Undertaking a lift-and-shift migration that doesn’t include these considerations often leaves you with the same mess for less.

3. You haven’t achieved business agility

You were sold on public cloud providing you with the answer to achieving the business agility needed to compete and rise above the competition.

But the agility of the public cloud is reliant on several key characteristics:

- Automation

- Scalability

- Flexibility

- Access to new services

- Global reach

- Increased uptime and information

- Developer access to infrastructure breaking down traditional silos

These characteristics mean that you can create and tear down pre-configured VMs almost instantly, opening the floodgates to rapid software delivery. The result is insane agility!

But lifting and shifting traditional enterprise architectures to the cloud will not, on its own, deliver the benefits listed above; those come from architectures designed for the cloud.

Typical problems:

- VMs are not configured to make use of autoscaling

- Enterprise monitoring solutions need manual configuration

- Environments cannot be torn down and recreated at the click of a button

- Changes and fixes are applied directly to production because there is no representative non-production environment

- Environments get out of step with production, creating further problems in the software development lifecycle

Factors like these result in traditional change management processes, with many manual steps, being carried over into the cloud. Those manual steps prevent agility in the platform and, as a result, lead to poor business agility.

7 Things You Should Do to Go Beyond Your Lift-and-Shift

If these pain points sound familiar, or you have similar ones, how do you go about solving them? What follows is a description of seven actions that you can take to increase the value you get from your migration.

The journey to the cloud should not stop once you have completed the lift-and-shift migration. No matter the reason for triggering the migration, it provides numerous opportunities to achieve cost savings and innovate.

Here are seven actions that you should take post lift-and-shift:

1. Make immediate savings by automatically turning machines off out-of-hours

The reality of most systems, especially in non-production environments, is that they are not fully utilized.

Unless you operate globally with ‘follow the sun’ development and test teams, you can set your non-production VMs to automatically turn off and turn on at set times.

Each of the major cloud providers charges for compute (CPU) by the second, so turning machines off stops that spend during downtime.

There are a number of ways that machines can be turned on and off:

- Machines can be turned on and off using schedules

- With events using Automation and Runbooks

- Using serverless Functions in Azure

- Lambda and CloudWatch events in AWS

- Cloud Scheduler in GCP
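As a rough illustration of the schedule-based approach, here is a minimal Python sketch of the decision a scheduled function might make each time it runs (for example, an AWS Lambda function triggered by a CloudWatch Events schedule). The Monday-to-Friday, 08:00 to 18:00 window and the function names are assumptions for illustration; the provider-specific calls that actually start or stop a VM are deliberately left out.

```python
from datetime import datetime, time

# Assumed office-hours window: adjust to your teams' real working patterns.
WORK_START = time(8, 0)
WORK_END = time(18, 0)
WORK_DAYS = {0, 1, 2, 3, 4}  # Monday=0 ... Friday=4, as returned by datetime.weekday()


def should_be_running(now: datetime) -> bool:
    """Return True if a non-production VM should be switched on at `now`."""
    return now.weekday() in WORK_DAYS and WORK_START <= now.time() < WORK_END


def desired_action(now: datetime, is_running: bool) -> str:
    """Decide whether a scheduled job should start, stop or leave a VM alone."""
    wanted = should_be_running(now)
    if wanted and not is_running:
        return "start"
    if not wanted and is_running:
        return "stop"
    return "none"


if __name__ == "__main__":
    # Saturday 1 June 2024, 10:00: a running dev VM should be stopped.
    print(desired_action(datetime(2024, 6, 1, 10, 0), is_running=True))  # stop
```

Keeping the decision separate from the provider API call makes it easy to test and to reuse across Azure Automation, AWS Lambda or GCP Cloud Scheduler jobs.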

One thing to note is that you will still be paying for the associated machine storage when the machine is off unless it is configured to auto delete.

So how much could you save if you only run machines during the working week?

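For a 40-hour working week, the hours machines need to run each month are:

$$\frac{40 \times 52}{12} \approx 173 \text{ hours}$$

Average hours in a month:

$$\frac{24 \times 7 \times 52}{12} = 728 \text{ hours}$$

Hours that machines could be turned off:

$$728 - 173 = 555 \text{ hours}$$

$$\frac{555}{728} \approx 76\%$$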

So potential savings could be up to 76% on compute spend by turning them off outside of working hours.
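The same arithmetic can be wrapped in a small Python function so you can test your own working patterns. It is a sketch that assumes, as the calculation above does, 52 weeks spread over 12 months and a flat hourly compute price; storage and other charges are ignored.

```python
WEEKS_PER_YEAR = 52
MONTHS_PER_YEAR = 12
HOURS_PER_WEEK = 24 * 7


def off_hours_fraction(working_hours_per_week: float) -> float:
    """Fraction of monthly compute hours that could be switched off."""
    hours_in_month = HOURS_PER_WEEK * WEEKS_PER_YEAR / MONTHS_PER_YEAR        # 728
    running_hours = working_hours_per_week * WEEKS_PER_YEAR / MONTHS_PER_YEAR  # ~173 for 40h
    return (hours_in_month - running_hours) / hours_in_month


print(f"{off_hours_fraction(40):.0%}")  # 76%
print(f"{off_hours_fraction(50):.0%}")  # 70%
```

A more forgiving 50-hour window (for example, 10-hour days to cover early starts and late finishes) still leaves a ceiling of about 70%.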

While this is a massive saving, it is unlikely that working patterns would allow you to achieve this level of savings and it only applies to compute spend on those machines that are in scope, e.g. development and test.

You can also make use of dynamic scaling capabilities to scale down production instances during periods when traffic and demand are lower.

Overall across all environments, the savings achieved will be more modest but still well worth the minimal effort needed.

2. Rightsize and optimise VMs

After running the migrated environment for a few months you will have accumulated sufficient usage and performance data to undertake a right-sizing exercise on VMs and databases.

The majority of lift-and-shift migrations that I have seen do a simple CPU and RAM conversion from data centre VMs, using conversion tables or migration tools provided by the cloud providers and third parties.

These provide a good start but are dependent on having a good understanding of the performance characteristics of the on-premises machines over an extended period of time and do not account for how the new cloud environment will perform.

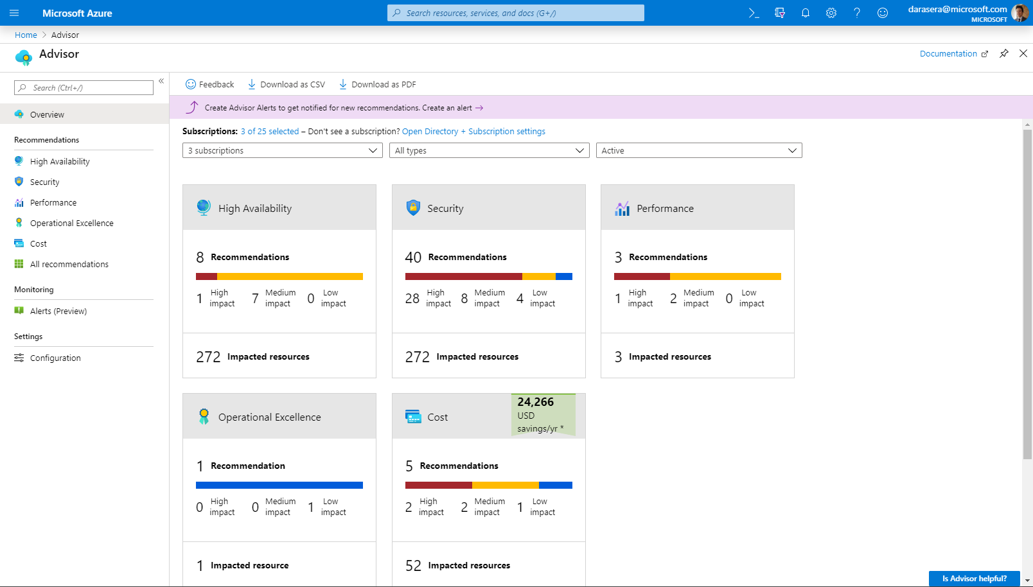

Each of the cloud providers has built-in tools that help with optimisation:

- Azure:

- Azure Advisor will provide recommendations on right-sizing services based on its metrics

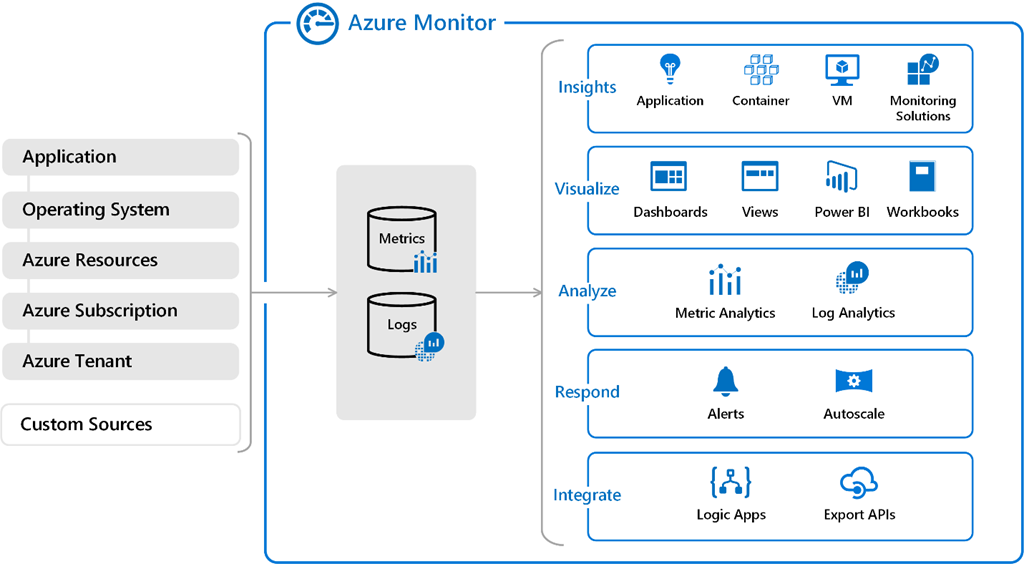

- Azure monitor captures performance metrics that can be used to build dashboards showing performance over time that can be used for optimising decisions

- AWS:

- Cost Optimiser provides recommendations on rightsizing and terminating idle EC2 instances in conjunction with CloudWatch for additional information

- GCP:

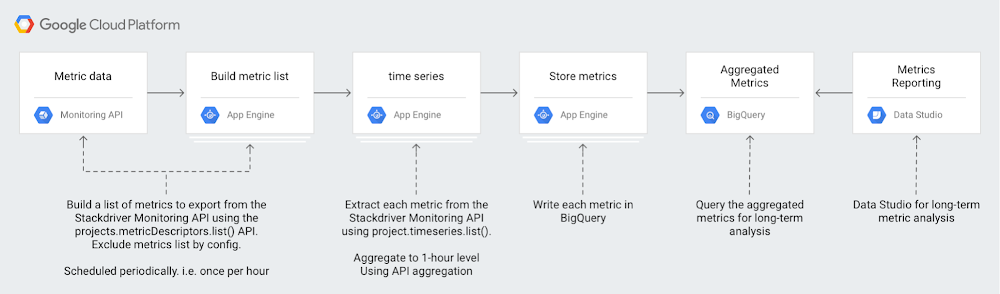

- Google Compute Engine provides rightsizing recommendations and the Compute Engine metrics can be exported to Big Query for further detailed optimisation decisions

Azure Advisor – Azure Best Practices

For example:

- Moving to the latest VM type is recommended to get an improved price/performance ratio

- Altering the VM size based on the metrics over the previous months, or from the advisor tools guidance, will bring better or the same performance and achieve cost savings

- Turning off VMs that have zero utilization
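To show the reasoning behind a right-sizing recommendation, here is a minimal Python sketch that picks a VM size from observed CPU utilisation. The size catalogue, the 60% target utilisation and the use of 95th-percentile CPU are illustrative assumptions; this is not how Azure Advisor, AWS or GCP actually compute their recommendations, and a real exercise would also weigh memory, disk and network metrics.

```python
import math

# Hypothetical size catalogue (name, vCPUs), smallest first.
# Real families, names and prices differ per provider.
SIZES = [("small", 2), ("medium", 4), ("large", 8), ("xlarge", 16)]


def percentile(samples, pct):
    """Nearest-rank percentile of a list of utilisation samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def recommend_size(current_vcpus, cpu_samples, target_util=0.6, pct=95):
    """Pick the smallest size that keeps p95 CPU load under target_util.

    cpu_samples are CPU utilisation fractions (0 to 1) measured on the current VM.
    """
    if max(cpu_samples) == 0:
        return "turn off (zero utilisation)"
    vcpus_needed = percentile(cpu_samples, pct) * current_vcpus / target_util
    for name, vcpus in SIZES:
        if vcpus >= vcpus_needed:
            return name
    return SIZES[-1][0]  # already at the largest size in the catalogue


if __name__ == "__main__":
    # A 16-vCPU VM sized for an on-premises peak that mostly idles at 5% CPU.
    samples = [0.05] * 90 + [0.20] * 10
    print(recommend_size(16, samples))  # large
```

Using a high percentile rather than the average keeps headroom for busy periods while still trimming capacity that was only there for a rare on-premises peak.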

My colleague Ben Saunders wrote a blog that covers this topic in much more depth: How to Optimize Your AWS Spend.

3. Make use of Reserved or Spot Instances

It is possible to further optimise cost by moving to either reserved or spot instances.

Reserved Instances commit you to a fixed period of spend, over one or three years, in exchange for additional price discounts.

Reserved Instances are a good option when you have predictable steady-state workloads.

But it is important to ensure that you are making effective use of them or you can end up paying for resources that you do not use.
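A quick way to check whether a reservation is worthwhile is to compare it with on-demand pricing at your actual utilisation. The minimal Python sketch below assumes a hypothetical $0.10/hour on-demand rate, a hypothetical 40% reservation discount and the 728-hour month used earlier; real rates and discounts vary by provider, region and term.

```python
def monthly_cost_on_demand(hourly_rate, hours_used):
    # On-demand: you pay only for the hours the machine actually runs.
    return hourly_rate * hours_used


def monthly_cost_reserved(hourly_rate, discount, hours_in_month=728):
    # Reserved: every hour of the term is paid for, used or not.
    return hourly_rate * (1 - discount) * hours_in_month


def break_even_utilisation(discount):
    """Utilisation above which a reservation beats on-demand pricing."""
    return 1 - discount


def cheaper_option(hourly_rate, discount, hours_used, hours_in_month=728):
    reserved = monthly_cost_reserved(hourly_rate, discount, hours_in_month)
    on_demand = monthly_cost_on_demand(hourly_rate, hours_used)
    return "reserved" if reserved < on_demand else "on-demand"


if __name__ == "__main__":
    print(break_even_utilisation(0.4))        # 0.6
    print(cheaper_option(0.10, 0.4, 173))     # on-demand (office hours only)
    print(cheaper_option(0.10, 0.4, 728))     # reserved (always on)
```

With a 40% discount the reservation only pays off above 60% utilisation, so a dev/test VM that is already switched off out of hours is usually better left on-demand.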

Spot Instances use up excess capacity in the cloud and can be interrupted. They tend to be useful for dev/test workloads, or perhaps for adding extra computing power to large-scale data analytics projects.

- Azure provides Reserved Instances for steady-state workloads and Spot Instances that can support being interrupted

- AWS provides Reserved Instances for steady-state workloads and Spot Instances for non-mission critical workloads that can support interruption such as some non-production workloads and additional analytics engine capacity

- GCP provides Committed Use Discounts for steady-state workloads and Preemptible VMs for short-term scaling or experiments (max 24 hours)

The savings you can generate are significant:

Amazon EC2 Reserved Instances provide a significant discount (up to 72%) compared to On-Demand pricing. Spot Instances are available at up to a 90% discount compared to On-Demand prices.

Azure provides savings up to 72% compared to pay-as-you-go prices—with one-year or three-year terms on Windows and Linux virtual machines. With Azure Spot Virtual Machines (Spot VMs), you’ll be able to access unused Azure compute capacity at deep discounts – up to 90% compared to pay-as-you-go prices.

Google offers savings up to 60% for reserved instances and also offers Sustained Use discounts of up to 30% when utilization is high over the monthly billing period. This offer is unique to GCP and is useful for customers that do not want to enter into a 1 or 3 year commitment. Preemptible VMs are up to 80% cheaper than regular instances.

4. Re-purpose on-premises licenses

Software licensing in the cloud takes two forms: you can either purchase licenses through the cloud provider or bring your own if you have an existing enterprise agreement.

Buying them from the cloud provider can be a good model when you only need licenses for a set period of time and do not want to enter into new enterprise agreements.

Bring-your-own can deliver savings because, depending on the software license agreements, licenses that were used in the data centre can be ported for use in the cloud.

The majority of enterprise software vendors have a policy that allows you to port your licenses to the cloud with some constraints.

For example, Microsoft licenses can be ported to Azure if you have also purchased Software Assurance. Note that if you are porting to other cloud platforms, you will need to make use of dedicated hosts and have a Licence Mobility Agreement. With the Azure Hybrid Use Benefit, it is possible to save up to 40% on running Windows Servers in Azure by porting your on-premises license.

5. Adopt FinOps to understand exactly where spend is occurring to drive optimisation decisions

Traditional finance departments are not set up to understand and manage the transition from CapEx to OpEx for cloud finances.

A contributing factor is that cloud spend is variable and, for most organisations in their first few years of cloud adoption, increasing.

FinOps is an approach to managing and operating cloud spend by breaking down the silos between engineering, finance and procurement.

All of the previous optimisation options should be considered part of your FinOps capability, which implies that the people managing cloud finance need a minimum level of technical skill and knowledge.

Understanding how and where cloud spend is occurring allows for accurate forecasting and cross-charging across cost centres.
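Cross-charging usually starts with tagging (labels in GCP) resources and grouping billing data by those tags. The minimal Python sketch below assumes the billing export has already been parsed into dictionaries and uses a hypothetical `cost-centre` tag; real exports, such as the AWS Cost and Usage Report, Azure cost exports or the GCP billing export to BigQuery, each have their own schema.

```python
from collections import defaultdict

UNTAGGED = "unallocated"


def spend_by_cost_centre(line_items, tag_key="cost-centre"):
    """Sum billing line items per cost centre using a resource tag.

    line_items: iterable of dicts with "cost" (float) and optional "tags" (dict).
    Items missing the tag are grouped under UNTAGGED so they stay visible.
    """
    totals = defaultdict(float)
    for item in line_items:
        centre = item.get("tags", {}).get(tag_key, UNTAGGED)
        totals[centre] += item["cost"]
    return dict(totals)


if __name__ == "__main__":
    items = [  # hypothetical billing rows
        {"cost": 120.0, "tags": {"cost-centre": "retail"}},
        {"cost": 30.5, "tags": {"cost-centre": "retail"}},
        {"cost": 80.0, "tags": {"cost-centre": "finance"}},
        {"cost": 12.25, "tags": {}},
    ]
    print(spend_by_cost_centre(items))
```

Reporting the "unallocated" bucket explicitly is a useful FinOps metric in its own right: the smaller it gets, the more of your spend can be traced to a business owner.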

Clarity over cloud finance enables a data-driven mechanism for measuring return on investment and alignment to business objectives.

My colleague Deepak Ramchandani Vensi has published a very interesting blog that covers FinOps in more detail: FinOps: How Cloud Finance Management Can Save Your Cloud Programme From Extinction.

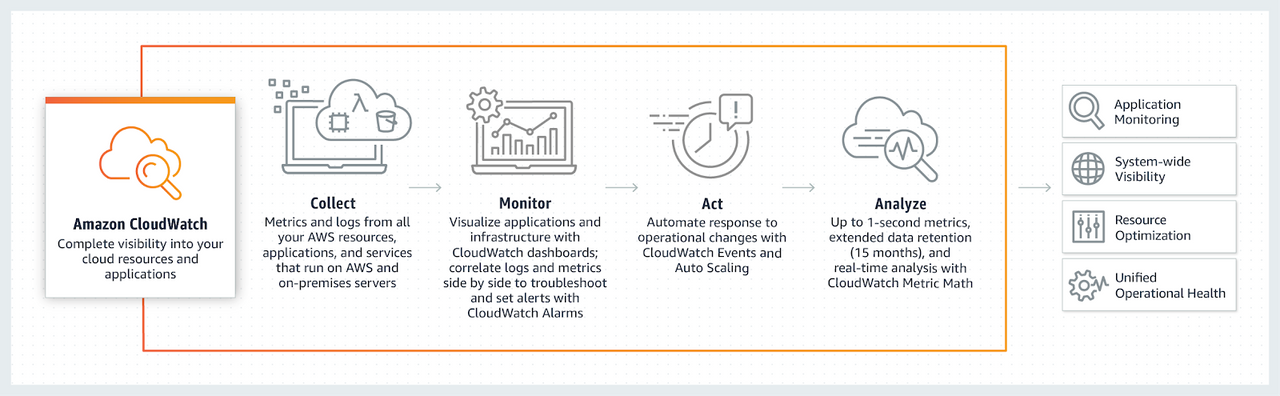

6. Make use of cloud-native monitoring and alerting tooling

Enterprise management software is expensive and requires its own infrastructure that needs to be supported.

There are very capable built-in monitoring tools in each of the public clouds.

You can move away from traditional on-premises monitoring tools such as BMC or IBM and use cloud-native software or cloud provider monitoring tools.

Cloud services are instrumented by default and these modern solutions are designed to cope with the tremendous data volumes that are produced. They also come with analytical capabilities to query the data to identify key events, explore trends and anomalies, and create alerts.

They also integrate with messaging services and serverless functions that can trigger automated actions.

The monitoring tools provided by the cloud providers do not require additional software to be installed, reducing deployment complexity.
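Alerting rules in these tools typically fire only when a metric breaches a threshold for several datapoints, which avoids paging on a single spike. The Python sketch below illustrates that idea with an "M out of N" rule, conceptually similar to the datapoints-to-alarm setting on CloudWatch alarms; it is a simplified illustration, not any provider's implementation, and the threshold and window sizes are assumptions.

```python
from collections import deque


class ThresholdAlarm:
    """Fire when at least `m` of the last `n` datapoints breach `threshold`."""

    def __init__(self, threshold, m=3, n=5):
        self.threshold = threshold
        self.m = m
        self.window = deque(maxlen=n)  # keeps only the most recent n results

    def observe(self, value):
        """Record a datapoint and return True if the alarm condition is met."""
        self.window.append(value > self.threshold)
        return sum(self.window) >= self.m


if __name__ == "__main__":
    alarm = ThresholdAlarm(threshold=90, m=3, n=5)
    for cpu in [85, 95, 70, 92, 96]:
        print(cpu, alarm.observe(cpu))  # only the last reading trips the alarm
```

In a real deployment the `True` result would publish to a messaging service or invoke a serverless function to take the automated action.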

Information on each of the major cloud providers' main monitoring and alerting tools (AWS CloudWatch, GCP Stackdriver and Azure Monitor) is below.

Amazon CloudWatch - Application and Infrastructure Monitoring

How to use GCP Stackdriver monitoring export for long-term metric analysis

7. Rearchitect your solutions to use cloud-native and serverless services

To truly take advantage of the cloud and use it as a vehicle to achieve digital transformation and business agility you should be re-architecting your applications to make use of cloud-native and serverless technology.

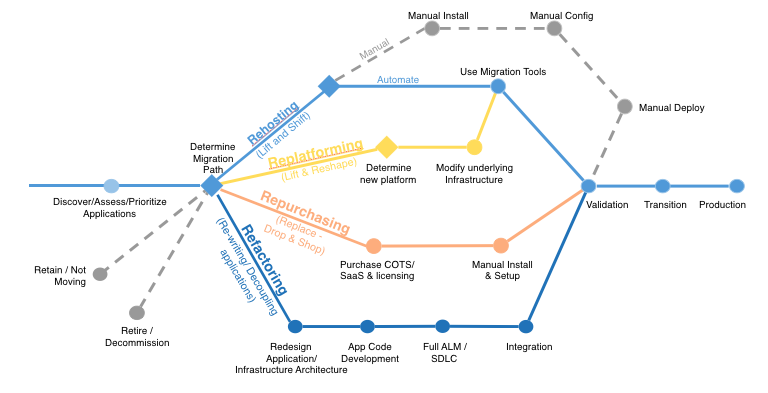

There are many approaches that can be used: AWS published six strategies in their enterprise blog on migrating to public cloud that are used across the industry.

A lift-and-shift migration is known as ‘rehosting’ in these strategies.

What is interesting is that you can apply the same assessment techniques on your migrated environment as you would to your data centre environments.

The aim is to take advantage of the value-added and native services that each cloud provides.

- You can start simple by enabling auto-scaling to reduce overall spend and take advantage of the elasticity of the cloud

- You can replace third-party network virtual appliances with cloud-native services, for example Azure Application Gateway with WAF

- You can re-architect your application to take advantage of containers and Kubernetes, which provide an abstraction from the underlying servers and orchestration to scale and self-heal. Each cloud provider has its own managed Kubernetes service that takes much of the machine management away from the customer, reducing operating complexity and cost. Have a read of this blog to understand the benefits of using Amazon's native Kubernetes service, EKS: Kubernetes Is Hard: Why EKS Makes It Easier for Network and Security Architects.
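Both auto-scaling and Kubernetes orchestration rest on a simple feedback rule. The Kubernetes Horizontal Pod Autoscaler documents its core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue); the Python sketch below re-implements that formula with a 10% tolerance band and min/max bounds. It is a simplified illustration (the real autoscaler also handles stabilisation windows and pod readiness), and the parameter names and defaults are assumptions.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Proportional scaling rule in the style of the Kubernetes HPA."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))  # 6: scale out under load
    print(desired_replicas(4, 20, 60))  # 2: scale in when quiet, cutting spend
```

Scaling in when demand drops is where the cost saving comes from: a lifted-and-shifted VM fleet stays at peak size around the clock.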

My colleague David Dyke has published a great blog on cloud-native architecture and its benefits: Cloud-Native Architecture: What It Is and Why It Matters

Conclusion: Don’t Stop at Lift-and-Shift!

To take advantage of your lift-and-shift migration it is essential that you do not stop after migrating!

You might already recognise some of these challenges in your own organisation.

The important thing to remember is that you have already gone through the hardest part: migrating in the first place.

The good news is that by taking the seven actions I have described, you can achieve attractive cost savings rapidly with minimal change, and business agility through re-architecting.