Using BDD in SRE for True Reliability (SLOs Can’t Tell You Everything!)

Site Reliability Engineers have one central responsibility: reliability!

How they keep customers happy with a high degree of service availability is well understood: establishing SLOs with customers, enforcing them by monitoring SLIs, and exercising the platform against failure through Game Days.

But what it means to be “available” is not always clear-cut, and these metrics alone may not paint a complete picture of what it means for a service to be “up”.

If a web service meets its availability SLO (say, 99.95%), but the content it actually serves is wrong 30% of the time, then your customers are getting poor service that you think is great! And your engineers will likely still spend a large portion of their time firefighting, even though their reliability dashboards look good.
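
To put a number on that gap, here is a back-of-the-envelope sketch in Python. It assumes the 30% of wrong content applies to responses that otherwise succeeded and that the two failure modes are independent, so the share of requests that are actually good is simply the product of the two rates.

```python
def effective_success_rate(availability: float, correctness: float) -> float:
    """Fraction of requests that are both served and correct.

    Assumes (for illustration) that content correctness is independent of
    availability, so the two rates multiply.
    """
    if not (0.0 <= availability <= 1.0 and 0.0 <= correctness <= 1.0):
        raise ValueError("rates must be between 0 and 1")
    return availability * correctness


# The availability dashboard reports 99.95%...
availability = 0.9995
# ...but 30% of the pages that are served contain the wrong content.
correctness = 1 - 0.30

rate = effective_success_rate(availability, correctness)
print(f"Dashboard says {availability:.2%}; customers actually get {rate:.2%}")
```

At these numbers, roughly 70% of requests are genuinely good, even though the availability dashboard reads 99.95%.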

What I’d like to demonstrate in this post is a simple and transparent way of using the principles behind behavior-driven development (BDD) and acceptance testing to paint a clearer picture of what reliability means on the ground.

This kind of reliability testing gives non-reliability engineers a better understanding of availability expectations, and it shifts some of the onus of making sure that the code is reliable onto the developer. Additionally, because these tests are written in plain English, everyone can understand them, which means that everyone can talk about and iterate on them.

BDD and SRE: An Unexpected Power Pair

Behavior-Driven Development, or BDD, helps provide a continuous interface through which product teams and engineering can collaborate and iterate on feature development. On healthy product teams, feature development through BDD looks something like this:

- Product teams begin the conversation for a new feature with an acceptance test: a file written in English that describes what the feature is and how it should behave.

- Engineering writes step definitions that implement the acceptance test and initially fail, then writes code that, ultimately, makes those step definitions pass.

- Once the acceptance test for that feature passes, the code for that feature enters the release process through to production via continuous integration.
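
Under the hood, a BDD tool binds each plain-English step to a step definition, typically by pattern matching. The sketch below is a deliberately tiny, hypothetical stand-in for that mechanism, not Cucumber's actual API: steps are matched against regular expressions, and a scenario containing a step with no definition yet comes back as undefined, which is the red state the workflow starts from.

```python
import re
from typing import Callable

# Registry of step definitions: each entry pairs a compiled pattern with a function.
STEPS: list[tuple[re.Pattern, Callable[..., None]]] = []

GHERKIN_KEYWORDS = re.compile(r"^(Given|When|Then|And|But)\s+")


def step(pattern: str) -> Callable[[Callable[..., None]], Callable[..., None]]:
    """Decorator that registers a function as the definition of a step pattern."""
    def register(func: Callable[..., None]) -> Callable[..., None]:
        STEPS.append((re.compile(f"^{pattern}$"), func))
        return func
    return register


def run_scenario(lines: list[str]) -> str:
    """Run a scenario's steps in order; return 'passed', 'failed', or 'undefined'."""
    for line in lines:
        text = GHERKIN_KEYWORDS.sub("", line.strip())
        for pattern, func in STEPS:
            match = pattern.match(text)
            if match:
                break
        else:
            return "undefined"  # no step definition yet, so the scenario is red
        try:
            func(*match.groups())  # captured groups become step arguments
        except AssertionError:
            return "failed"
    return "passed"


scenario = ["Given I am on the login page", 'When I click "Log in with Google"']


@step("I am on the login page")
def on_login_page() -> None:
    pass  # hypothetical: a real step would open /login in a browser driver


before = run_scenario(scenario)  # the "When" step has no definition yet


@step(r'I click "(.+)"')
def click(button: str) -> None:
    assert button.startswith("Log in with")  # hypothetical check


after = run_scenario(scenario)
print(before, after)
```

Here `before` is `"undefined"` and `after` is `"passed"`: registering the missing step definition is what turns the scenario green. Real tools add much more (hooks, data tables, reporting), but the binding idea is the same.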

An Example of BDD in Action

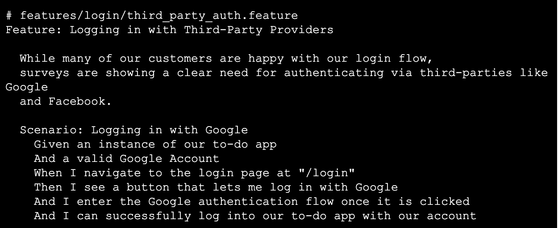

Here’s a simple example of this in action. Your company maintains a sharp-looking to-do list product. Customer feedback collected from surveys has demonstrated a clear need for integrating your login workflow with third-party OAuth providers, namely Google and Facebook. In preparation for your bi-weekly story grooming session, a product owner might author an acceptance test with Cucumber that looks like this:
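
A feature file for this story might read something like the hypothetical one below; the wording is mine, for illustration only. To keep all examples in one language it's held in a Python string, with a toy parser (not the official Gherkin parser) that pulls out each scenario and its Given/When/Then steps.

```python
# A hypothetical feature file for the OAuth login story, held as a string.
FEATURE = """\
Feature: Log in with a third-party account
  As a to-do list user
  I want to log in with my Google or Facebook account
  So that I don't have to manage another password

  Scenario: Logging in with Google
    Given I am on the login page
    When I click "Log in with Google"
    And I approve access on Google's consent screen
    Then I should see my to-do list

  Scenario: Logging in with Facebook
    Given I am on the login page
    When I click "Log in with Facebook"
    And I approve access on Facebook's consent screen
    Then I should see my to-do list
"""

STEP_KEYWORDS = {"Given", "When", "Then", "And", "But"}


def scenarios(feature: str) -> dict[str, list[str]]:
    """Map each scenario title to its steps (a toy parser, not the Gherkin one)."""
    result: dict[str, list[str]] = {}
    current = None
    for raw in feature.splitlines():
        line = raw.strip()
        if line.startswith("Scenario:"):
            current = line.removeprefix("Scenario:").strip()
            result[current] = []
        elif current is not None and line.split(" ", 1)[0] in STEP_KEYWORDS:
            result[current].append(line)
    return result


for title, steps in scenarios(FEATURE).items():
    print(f"{title}: {len(steps)} steps")
```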

Ideally, these acceptance tests would live in a separate repository, since they are closer to integration tests than to service-level tests. A separate repository also makes continuous acceptance testing easier, because the pipeline running the tests only needs to operate against one repository instead of potentially many. However, keeping all acceptance tests in one shared repository can complicate pull requests for service repositories, since running the entire acceptance suite for a single PR is expensive and probably unnecessary. This can be engineered around, but it takes a bit of work.
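
One way to engineer around the cost of a shared repository, sketched under assumptions of my own: tag each feature file with the services it exercises (hypothetical tags such as `@service-auth`), map the paths changed in a service PR to those tags, and run only the matching features. The directory names, tag names, and feature files below are all hypothetical.

```python
from pathlib import PurePosixPath

# Hypothetical mapping from top-level directories in the codebase to service tags.
SERVICE_TAGS = {
    "auth": "@service-auth",
    "todos": "@service-todos",
}

# Hypothetical index of feature files in the acceptance-test repo and their tags.
FEATURE_TAGS = {
    "features/oauth_login.feature": {"@service-auth"},
    "features/login_latency.feature": {"@service-auth", "@reliability"},
    "features/todo_crud.feature": {"@service-todos"},
}


def features_for_pr(changed_paths: list[str]) -> list[str]:
    """Return the feature files whose service tags match the PR's changed paths."""
    touched = set()
    for path in changed_paths:
        parts = PurePosixPath(path).parts
        if parts and parts[0] in SERVICE_TAGS:
            touched.add(SERVICE_TAGS[parts[0]])
    # A feature runs if it shares at least one service tag with the change.
    return sorted(f for f, tags in FEATURE_TAGS.items() if tags & touched)


print(features_for_pr(["auth/oauth/google.py", "README.md"]))
```

A PR that only touches documentation selects nothing and skips the suite; the full suite can still run on merge or on a schedule.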

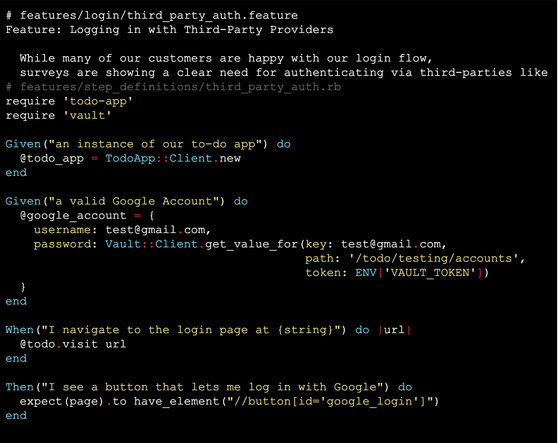

After Product and Engineering agree on the scope of this feature and its timing in the backlog, an engineer might author a failing series of step definitions for this feature, one of which might look something like this:
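
Here is a hypothetical step definition in that spirit, written in Python for consistency with the other examples (Cucumber itself runs step definitions in languages such as Ruby, Java, and JavaScript). `TodoApp` and `then_redirected_to` are invented names, and the expected provider hosts are assumptions. Because the app has no OAuth support yet, the step fails, which is exactly where the story should start.

```python
from urllib.parse import urlparse

# Hosts each provider's OAuth flow is assumed to start on (illustrative).
PROVIDER_HOSTS = {
    "Google": "accounts.google.com",
    "Facebook": "www.facebook.com",
}


class TodoApp:
    """Hypothetical stand-in for the app under test, before OAuth is built."""

    def click(self, button: str) -> str:
        # Returns the URL the browser lands on; today every button stays on /login.
        return "https://todo.example.com/login"


def then_redirected_to(app: TodoApp, provider: str) -> None:
    """Then I should be redirected to <provider>'s sign-in page."""
    url = app.click(f"Log in with {provider}")
    host = urlparse(url).hostname
    expected = PROVIDER_HOSTS[provider]
    assert host == expected, f"expected {expected}, got {host}"


try:
    then_redirected_to(TodoApp(), "Google")
    status = "passed"
except AssertionError as err:
    status = f"failed: {err}"
print(status)
```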

Once the engineer playing this story can make this series of step definitions pass, Engineering and Product can run the acceptance test end-to-end to confirm that the implemented feature is in the ballpark of what they were looking for. (Yay for automating QA!) Once they agree, the feature is released to production through the CI/CD pipeline.

An Example of BDD for Site Reliability in Action

We can employ the same tactics outlined above to define availability constraints. However, in this instance, the Reliability team would be submitting these acceptance tests instead of Product.

Let’s say that data collected from user session tracking shows that, of the 100,000 users who use our to-do app in any given month, 85% of those who wait more than five seconds for the login page leave our app, presumably for a competitor like Todoist. Because our company is backed by venture capital, growth is its primary metric. Growth at any cost helps with future funding rounds, which in turn will let the company explore more expensive market plays and fund a potential IPO. Thus, capturing as many of those fleeting 85% as possible is critical.
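
The stakes are easy to quantify. The scenario gives us 100,000 monthly users and an 85% abandonment rate among users who wait more than five seconds, but not how many users actually hit a slow login page, so the slow-login shares in this sketch are made-up inputs.

```python
def users_lost_per_month(monthly_users: int, slow_share: float, abandon_rate: float) -> int:
    """Users who wait more than 5 s for the login page and then leave.

    monthly_users: active users per month (100,000 in the example)
    slow_share:    fraction of users who wait more than 5 s (hypothetical input)
    abandon_rate:  fraction of those slow-page users who leave (85% in the example)
    """
    return round(monthly_users * slow_share * abandon_rate)


for slow_share in (0.01, 0.05, 0.10):
    lost = users_lost_per_month(100_000, slow_share, 0.85)
    print(f"{slow_share:.0%} slow logins -> about {lost:,} users lost per month")
```

At these numbers, every percentage point of slow logins costs about 850 users a month.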

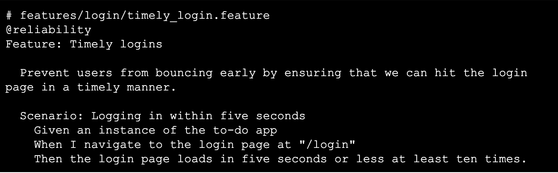

To that end, the Reliability team can write an acceptance test that looks like this:
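
A hypothetical version of such a test might look like the following, with the feature text kept as comments and its timing step written in Python. The `fetch` callable and the injectable clock are assumptions that let the step run without a network; in a real suite, `fetch` would drive a browser or HTTP client against /login. Note that the step checks both the status code and the load time, since a 200 that arrives after the five-second mark still loses the user.

```python
import time
from typing import Callable

# @reliability
# Feature: Login page responsiveness
#   Scenario: The login page loads quickly
#     Given I am a visitor
#     When I open the login page
#     Then it should finish loading within 5 seconds

LOGIN_BUDGET_SECONDS = 5.0


def then_loads_within(
    fetch: Callable[[], int],
    limit: float = LOGIN_BUDGET_SECONDS,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Fetch the login page, assert it succeeded within `limit` seconds, return elapsed time."""
    start = clock()
    status = fetch()  # hypothetical: returns the HTTP status code of GET /login
    elapsed = clock() - start
    assert status == 200, f"/login returned HTTP {status}"
    assert elapsed <= limit, f"/login took {elapsed:.2f}s (limit {limit:.0f}s)"
    return elapsed


# Simulated run with a fake clock: the page "takes" 6.5 seconds to load.
ticks = iter([100.0, 106.5])
try:
    then_loads_within(lambda: 200, clock=lambda: next(ticks))
    outcome = "passed"
except AssertionError as err:
    outcome = f"failed: {err}"
print(outcome)
```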

Notice the @reliability tag at the top of this acceptance test. This tag is important, as it allows us to run our acceptance tests with a specific focus on reliability. Since these tests are intended to be quick, we can run them on a schedule several times per hour. If their failure rate (a metric your observability stack should capture) gets too high, Reliability can decide whether to roll back or fail forward. Developers can also run these tests locally, which gives them more confidence that they are releasing a reliable product and a better sense of what “reliability” actually means.
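
Cucumber can already select these scenarios with a tag expression (for example, `cucumber --tags @reliability`), so the part worth sketching is the bookkeeping around the scheduled runs. The class below keeps a sliding window of recent pass/fail results and flags when the failure rate crosses a threshold. The window size and 10% threshold are placeholder values, and in practice this calculation would more likely live in your observability stack than in a script.

```python
from collections import deque


class ReliabilityWindow:
    """Tracks the last `size` scheduled @reliability runs and their failure rate."""

    def __init__(self, size: int = 12, max_failure_rate: float = 0.10) -> None:
        # 12 runs covers roughly the last hour if the suite runs every 5 minutes.
        self.results: deque[bool] = deque(maxlen=size)
        self.max_failure_rate = max_failure_rate

    def record(self, passed: bool) -> None:
        # Oldest results fall off automatically once the window is full.
        self.results.append(passed)

    def failure_rate(self) -> float:
        if not self.results:
            return 0.0
        return self.results.count(False) / len(self.results)

    def needs_attention(self) -> bool:
        """True when Reliability should decide whether to roll back or fail forward."""
        return self.failure_rate() > self.max_failure_rate


window = ReliabilityWindow()
for passed in [True] * 10 + [False, False]:
    window.record(passed)
print(f"failure rate {window.failure_rate():.0%}, needs attention: {window.needs_attention()}")
```

Two failures in the last twelve runs gives a failure rate of about 17%, which exceeds the placeholder threshold and hands the rollback-or-fail-forward decision to a human.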

Reliability Tests Don’t Replace Observability!

Feature testing tools like Cucumber are often used well beyond their initial scope, largely because they are so flexible. That said, I am not arguing for removing observability tools! Quite the contrary, in fact: I think that reliability tests complement more granular, data-driven monitoring techniques quite nicely.

Going back to our /login example, setting a service-level objective around liveness (whether /login returns HTTP 200 OK or not) still goes a long way toward giving customers a general expectation of how available this service will be during a given period. Driving that with feature tests would be complicated and slow, and slow signals make it much harder for teams to hit their SLO targets, because problems are detected later and burn more error budget. Near real-time monitoring against the /login service tells a fuller story of its health when paired with a dashboard that shows the service’s uptime and remaining error budget alongside a widget showing the rate at which its reliability tests are passing.
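
The dashboard described above comes down to a few numbers. As a sketch, assuming a 30-day window and a 99.95% availability SLO, the error budget is 43,200 minutes × 0.05% = 21.6 minutes of allowed downtime. The class below computes how much of that budget is left and sets it next to the reliability-test pass rate; the class name, field names, and sample inputs are illustrative.

```python
from dataclasses import dataclass


@dataclass
class LoginHealth:
    slo: float               # availability target, e.g. 0.9995
    window_minutes: float    # length of the SLO window, e.g. 30 days
    bad_minutes: float       # minutes /login failed its liveness check
    reliability_passes: int  # scheduled @reliability runs that passed
    reliability_runs: int    # scheduled @reliability runs in total

    @property
    def error_budget_minutes(self) -> float:
        # Allowed downtime in the window: window length times (1 - SLO).
        return self.window_minutes * (1 - self.slo)

    @property
    def budget_remaining(self) -> float:
        """Fraction of the error budget still unspent (negative if overspent)."""
        return 1 - self.bad_minutes / self.error_budget_minutes

    @property
    def reliability_pass_rate(self) -> float:
        if not self.reliability_runs:
            return 1.0
        return self.reliability_passes / self.reliability_runs


health = LoginHealth(slo=0.9995, window_minutes=30 * 24 * 60, bad_minutes=5.4,
                     reliability_passes=1900, reliability_runs=2000)
print(f"error budget: {health.error_budget_minutes:.1f} min, "
      f"{health.budget_remaining:.0%} remaining; "
      f"reliability tests passing: {health.reliability_pass_rate:.1%}")
```

In this example, 75% of the error budget remains, yet one in twenty reliability runs is failing, which is exactly the kind of gap a liveness SLO alone would hide.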

Wrapping Up

In sum, setting SLOs and chasing SLIs are tenets most SREs understand well. However, these metrics alone may not paint a complete picture of what it means for a service to be “up.”

Adding BDD to your SRE mix can help ensure that you aren’t fooled by your dashboards into patting yourself on the back for a job well done while you’re actually delivering subpar service to your customers!

Give it a try!